Blockchain Data Observability 101

Web3 engineers are mainly left in the dark when it comes to observing decentralized applications.

Jericho is the land of web3 founders. Meet, learn & build with 400+ hand-picked founders from 40+ countries.

This article was co-written with Blocktorch. They are on a mission to give web3 builders superpowers by building the first web3 observability platform where engineers can get relevant information at the right time.

The development of web3 is progressing rapidly. As more decentralized applications reach scale, their architecture is becoming more and more complex.

Web3 engineers are largely left in the dark when it comes to observing decentralized applications. To ensure the reliability of complex software running at high scale, observability practices are indispensable; without them, teams constantly have to sacrifice engineers to firefighting incidents.

First, what is observability?

The overarching goal of observability is to bring better visibility into systems.

No complex system is ever fully healthy, and distributed systems are unpredictable. Without observability, teams make assumptions about system behavior, which leads to guesswork when trying to improve performance and stability.

Observability requires (1) the ability to capture data including context, and (2) the ability to query that data iteratively in order to find new insights. To achieve both, there are three core pillars of observability: event logs, metrics, and traces.

Simply collecting these three data types is of limited value on its own. It is also important to generate insights from them, including alerting, visualization, and the ability to anticipate future behavior. While it is tempting to “measure everything”, this creates an excess of information that yields no further insights and causes costly infrastructure expenditures. Knowing what to measure and how to measure it is critical.

Log well. Live well.

In web3, logs are the events that transactions emit on the blockchain.

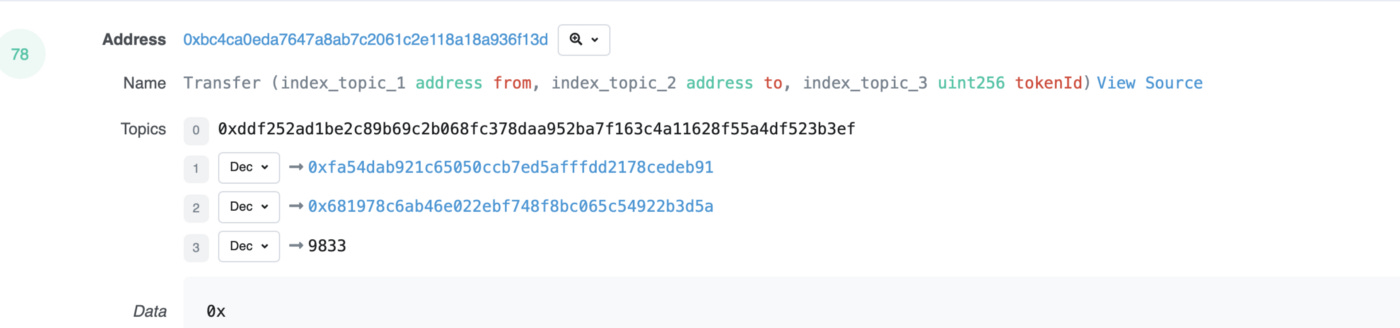

Logs come in the form of plain text, JSON, or binary. Etherscan is a great web3 tool to observe logs.

The process of gathering, processing, storing, and analyzing event data about system performance is called logging. It helps engineers catch issues as they arise by classifying messages into levels such as Error, Warning, and Info. Modern log management systems go beyond using logs purely to generate information: they also automatically aggregate, index, and analyze logs to provide insights to teams and feed them into data pipelines and other utilities.

Fun fact: In ancient times, the only way to measure a ship’s speed was to throw a wooden log into the water and observe how fast it moved away from the ship. This method of measuring a ship’s progress through the water was called ‘Heaving the Log’. The log-book was the ledger with daily entries about the particulars of the ship’s voyage, including the rate of progress as indicated by the log. Logging was born.

In Solidity, events act like the logging functionality of other languages, but instead of logging to the console or to a file, the logs are saved on the blockchain. When an event is emitted, its arguments are stored in the transaction log. These logs are associated with the address of the contract and can be read from the blockchain as long as the block is accessible.

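The first part of a log record consists of an array of topics, which describe the event: for a regular (non-anonymous) event, the first topic is the keccak-256 hash of the event signature, followed by up to three indexed parameters. The second part of a log record consists of the data, which holds the ABI-encoded non-indexed parameters. In terms of gas spending, events are also a cheap way of storing on-chain data, although smart contracts themselves cannot read logs.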
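To make the topics/data split concrete, here is a minimal sketch that decodes a raw ERC-721 `Transfer` log by hand. It assumes the standard signature `Transfer(address,address,uint256)` with all three parameters indexed, so the `data` field is empty; the log object mimics the shape returned by the JSON-RPC method `eth_getLogs`, and the addresses and token ID in the usage example are made up. In practice, libraries such as web3.js or ethers.js do this decoding for you from the ABI.

```javascript
// keccak256("Transfer(address,address,uint256)"), i.e. topic 0 of every Transfer event
const TRANSFER_TOPIC =
  '0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef';

// An indexed address is left-padded to 32 bytes; keep the last 20 bytes (40 hex chars)
function topicToAddress(topic) {
  return '0x' + topic.slice(-40);
}

// Assumes the ERC-721 layout: all three parameters indexed, nothing in `data`
// (ERC-20 Transfer uses the same topic 0 but keeps the amount in `data`)
function decodeErc721Transfer(log) {
  const [signature, fromTopic, toTopic, tokenIdTopic] = log.topics;
  if (signature !== TRANSFER_TOPIC || log.topics.length !== 4) {
    throw new Error('not an ERC-721 Transfer log');
  }
  return {
    contract: log.address,
    from: topicToAddress(fromTopic),
    to: topicToAddress(toTopic),
    tokenId: BigInt(tokenIdTopic), // uint256 does not fit in a JS number, so use BigInt
  };
}

// Hypothetical mint log: `from` is the zero address
const exampleLog = {
  address: '0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D',
  topics: [
    TRANSFER_TOPIC,
    '0x' + '0'.repeat(64),
    '0x' + '0'.repeat(24) + '11'.repeat(20),
    '0x' + '0'.repeat(62) + '2a',
  ],
  data: '0x',
};

console.log(decodeErc721Transfer(exampleLog)); // tokenId: 42n, from: the zero address
```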

Logging in web2 vs. web3

When writing logs, it is important to make them meaningful and actionable, but at the time of writing, one does not know exactly what will be needed for troubleshooting in the future. However, there are some best practices from web2 that also apply in web3:

Meaningful log entries are not cryptic: keep in mind that when the log is read later, the context present at the time of writing will be absent.

Log messages should be written in a language everyone on the team can understand, so most probably English.

The message should contain context to make it easier to troubleshoot.

Keep in mind that not only humans but also machines should be able to read the log message, so messages should be both human-readable and machine-parseable.

And finally, do NOT log sensitive information like passwords, private keys, etc.
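The practices above can be combined in a small structured logger. This is a minimal sketch under our own assumptions: one JSON object per line (machine-parseable), a plain-English message (human-readable), explicit context fields, and shallow redaction of a hypothetical list of sensitive keys. A real policy would need a longer key list and nested redaction.

```javascript
// Hypothetical, non-exhaustive list of keys that must never reach the logs
const SENSITIVE_KEYS = new Set(['password', 'privateKey', 'mnemonic', 'apiKey']);

// Shallow redaction: replaces the values of top-level sensitive keys only
function redact(context) {
  const safe = {};
  for (const [key, value] of Object.entries(context)) {
    safe[key] = SENSITIVE_KEYS.has(key) ? '[REDACTED]' : value;
  }
  return safe;
}

// One JSON line per entry: level, readable message, and the context needed for troubleshooting
function formatLog(level, message, context = {}) {
  return JSON.stringify({
    timestamp: new Date().toISOString(),
    level, // e.g. 'error', 'warn', 'info'
    message,
    context: redact(context),
  });
}

const line = formatLog('error', 'Mint transaction reverted: max supply reached', {
  chainId: 1,
  contract: '0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D',
  wallet: '0x' + '11'.repeat(20), // hypothetical wallet
  privateKey: 'must-never-appear-in-logs',
});
console.log(line);
```

Nesting the context under its own key keeps caller-supplied fields from overwriting `level` or `message`.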

The major difference between logging in web2 and web3 is the amount of logging.

While logging too much is never good, as it creates noise, there should be enough logs containing enough information. In web2, developers tend to err on the side of logging too much and later have to cut logs that turn out not to be relevant.

In web3, every bit of information emitted by a smart contract increases the gas cost. That leads teams to leave out events entirely if they are not crucial for triggering actions in the dApp. There are two kinds of logs in web3:

one in the form of events, declared with the event keyword in Solidity as described above

one in the form of error messages returned when a condition checked with the require() function fails.

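Notice that require() is not the only way to return a message: revert() also accepts a reason string, and since Solidity 0.8.4 custom errors (revert MyError(...)) can carry structured data, while assert() takes no message at all. Unlike events, these error messages are returned to the caller rather than stored in the transaction log, and any events emitted before the revert are discarded along with the other state changes. When defining events in Solidity, at most three parameters can be marked as indexed (stored as topics, which makes them filterable), so these three should be chosen carefully; any further parameters go into the data section. One way of capturing more information from a single function is to emit more than one event in it (at the cost of higher gas fees).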
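To put the gas trade-off into numbers, here is a rough sketch of the gas charged by the LOG opcode itself, using the Yellow Paper constants (375 base, 375 per topic, 8 per byte of data). It assumes a non-anonymous event (topic 0 is the signature hash) with only static 32-byte parameters, and it ignores memory expansion and the surrounding opcodes, so real costs are somewhat higher.

```javascript
// Yellow Paper constants for LOG0..LOG4
const G_LOG = 375;             // base cost of any LOG opcode
const G_LOG_TOPIC = 375;       // per topic
const G_LOG_DATA_PER_BYTE = 8; // per byte of data
const WORD_BYTES = 32;         // each static ABI-encoded parameter occupies one word

// Assumes a non-anonymous event with static parameters only
function estimateEventGas({ indexed, nonIndexed }) {
  if (indexed > 3) {
    throw new Error('non-anonymous events allow at most three indexed parameters');
  }
  const topics = 1 + indexed; // signature hash + indexed parameters
  const dataBytes = nonIndexed * WORD_BYTES;
  return G_LOG + G_LOG_TOPIC * topics + G_LOG_DATA_PER_BYTE * dataBytes;
}

// ERC-721 Transfer: three indexed parameters, empty data
console.log(estimateEventGas({ indexed: 3, nonIndexed: 0 })); // 1875
// ERC-20 Transfer: two indexed parameters, amount in data
console.log(estimateEventGas({ indexed: 2, nonIndexed: 1 })); // 1756
```

For comparison, writing a value into a previously empty 32-byte storage slot costs at least 20,000 gas, which is why events are a cheap way to record data that only off-chain consumers need.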

Ways of using logs in web3

There are three main ways today to see events emitted by smart contracts:

Look them up manually on a block explorer like Etherscan. To see the logs of a Bored Ape Yacht Club (BAYC) NFT transfer on Etherscan:

Open the transaction you want to take a look at

Navigate to “Logs”

The second entry represents the logs of the Transfer event

Looking up logs on a block explorer is a quick solution if you only want to see the logs of one specific transaction, but it does not scale at all.

Listen to events emitted through a script with the help of libraries like web3.js or ethers.js.

The prerequisite is to have the Application Binary Interface (ABI) of the smart contract together with the smart contract address; otherwise, the events cannot be decoded. If the smart contract is verified and published on a block explorer, its ABI is visible to everyone; otherwise, typically only the deployer of the smart contract has access to the ABI. As shown below, the contract first needs to be instantiated with the ABI and contract address. Afterward, one can listen to Transfer events emitted by this contract and, in our example, display them in the console or store them in a database for further usage. Those interested can find a more detailed explanation of ABIs from our friends at Alchemy.

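```javascript
// Uses the web3.js 1.x API; event subscriptions need a WebSocket provider
const Web3 = require('web3');
const CONTRACT_ABI = require('./BAYCabi.json'); // ABI of the BAYC contract, e.g. copied from Etherscan

// Insert your own key, ideally via an environment variable instead of hard-coding it
const INFURA_KEY = process.env.INFURA_KEY;
const web3 = new Web3('wss://mainnet.infura.io/ws/v3/' + INFURA_KEY);

const CONTRACT_ADDRESS = '0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D';
const contract = new web3.eth.Contract(CONTRACT_ABI, CONTRACT_ADDRESS);

// Subscribe to every Transfer event the contract emits from now on
function listenToTransfers() {
  contract.events.Transfer()
    .on('data', (event) => {
      // event.returnValues holds the decoded from, to and tokenId
      console.log(event);
    })
    .on('error', console.error);
}

listenToTransfers();
```

Use “off-the-shelf” indexing solutions like The Graph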

It is possible either to use a subgraph (The Graph’s name for open APIs) that has been built and published by someone else or to build your own subgraphs. Covering how this works in detail would fill a whole blog post by itself, but we recommend checking out their documentation or content from Nader Dabit. If you are interested in an indexer for the Solana blockchain, check out SolanaFM.

Logs and log management are important to ensure engineers can understand the health and performance of a system. Logging should be taken into consideration at every step of the software development lifecycle: if you only start wishing for logs once things get rough, it is too late. Even though logging in web3 is different from web2, the core principles of what logs should contain stay the same.

Metrics don’t lie.

Metrics represent logs numerically over intervals of time.

They can be used for dashboard visualization & mathematical modeling, and enable longer retention & easier querying. Metrics are well suited for observing the overall health of a system. Dune is a great web3 tool to visualize metrics.

Metrics — numbers that tell a story

Because a metric is measured over time, the measurements are stored in a time series database. Each data point in a time series database typically contains a metric name, labels, a numeric value, and an exact timestamp, often in milliseconds.
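To illustrate how logs become metrics, here is a minimal sketch that counts events per fixed interval and emits points shaped as described above (name, labels, numeric value, timestamp in ms). The event shape, metric name, and labels are hypothetical; a real pipeline would write these points to a time series database instead of returning them.

```javascript
// Hypothetical input: events that each carry a millisecond timestamp, e.g. decoded Transfer logs
function toTimeSeries(events, { name, labels = {}, intervalMs }) {
  const counts = new Map(); // bucket start (ms) -> number of events in that interval
  for (const { timestampMs } of events) {
    const bucketStart = Math.floor(timestampMs / intervalMs) * intervalMs;
    counts.set(bucketStart, (counts.get(bucketStart) ?? 0) + 1);
  }
  // One point per non-empty interval, oldest first: name, labels, value, timestamp
  return [...counts.entries()]
    .sort(([a], [b]) => a - b)
    .map(([timestamp, value]) => ({ name, labels, value, timestamp }));
}

const HOUR_MS = 60 * 60 * 1000;
const transferEvents = [
  { timestampMs: Date.UTC(2022, 5, 1, 10, 5) },
  { timestampMs: Date.UTC(2022, 5, 1, 10, 40) },
  { timestampMs: Date.UTC(2022, 5, 1, 11, 15) },
];

// Two points: 2 transfers in the 10:00 UTC hour, 1 in the 11:00 UTC hour
console.log(toTimeSeries(transferEvents, {
  name: 'nft_transfers',
  labels: { contract: 'BAYC' },
  intervalMs: HOUR_MS,
}));
```

Intervals without events produce no point here; whether to emit explicit zeros depends on how the downstream database and dashboards treat gaps.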

Metrics play a key role in alerting and are therefore the fundamental pillar of observability underlying Service Level Agreements (SLAs), Service Level Objectives (SLOs), and Service Level Indicators (SLIs). The core metrics used for monitoring the overall state of a system can be grouped into the following categories:

Availability

Health

Request rate

Saturation

Utilization

Error rate

Latency
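As an illustration, three of these categories (request rate, error rate, latency) can be derived from raw request records, for example the calls a dApp backend makes to an RPC node. This is a minimal sketch: the record shape `{ latencyMs, ok }` is a hypothetical assumption, and p95 latency uses the nearest-rank percentile method.

```javascript
// Nearest-rank percentile: the smallest value with at least p% of values at or below it
function percentile(sortedValues, p) {
  const rank = Math.ceil((p / 100) * sortedValues.length);
  return sortedValues[Math.max(rank - 1, 0)];
}

// Hypothetical record shape: { latencyMs: number, ok: boolean }
function coreMetrics(requests, windowSeconds) {
  const errors = requests.filter((r) => !r.ok).length;
  const latencies = requests.map((r) => r.latencyMs).sort((a, b) => a - b);
  return {
    requestRate: requests.length / windowSeconds, // requests per second
    errorRate: requests.length ? errors / requests.length : 0,
    p95LatencyMs: latencies.length ? percentile(latencies, 95) : null,
  };
}

// Ten requests observed over a 5-second window; requests #3 and #9 failed
const sample = [120, 80, 95, 300, 110, 90, 85, 100, 105, 900]
  .map((latencyMs, i) => ({ latencyMs, ok: i !== 3 && i !== 9 }));

console.log(coreMetrics(sample, 5)); // { requestRate: 2, errorRate: 0.2, p95LatencyMs: 900 }
```

Percentiles are used instead of averages because a few very slow calls, like the 900 ms one here, are exactly what users notice.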

While one can try to measure everything and go wild with building metrics, one should think carefully about which metrics are the right ones for the underlying purpose.

If the metrics you are looking at are not useful in optimizing your strategy, stop looking at them. — Mark Twain

Metrics stay metrics - in web2, web3, and, yes Jake, even in web5

When deciding which solution to adopt for metrics monitoring, the core features to look for are collecting events with a timestamp and value, storing them efficiently at volume, supporting queries over them, offering visualization capabilities, and potentially integrating with other tools like Grafana.

Here are some vendor choices:

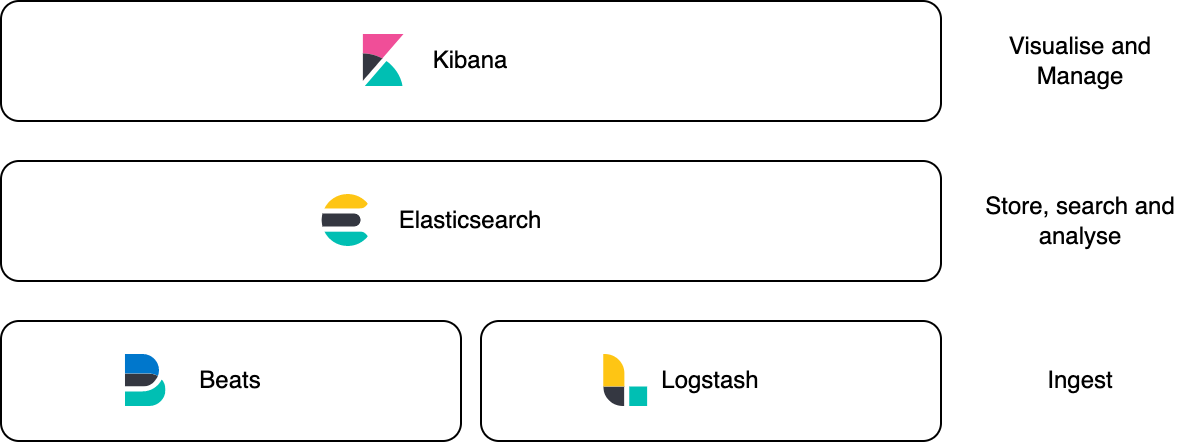

1. ELK stack (aka Elastic Stack)

The ELK stack (Elasticsearch, Logstash, Kibana) is a popular choice due to the mature products Elastic offers, starting with Beats and Logstash to collect different types of metrics. Elastic offers a suite of tools and plugins that support data collection from many web2 components and infrastructure environments. In addition, Elasticsearch is a powerful engine for storing, searching, and managing text data. Moreover, Kibana is a powerful tool to search, analyze, and visualize data with little configuration effort. The diagram below shows the structure of the ELK stack.

2. TIG stack (Telegraf, InfluxDB, Grafana)

The TIG stack relies on InfluxDB and its ecosystem to build ETL pipelines for metrics. InfluxData offers Telegraf, a plugin-driven server agent for collecting data. Plugins are available for many popular data sources, and adding new plugins is fairly easy.

In addition, InfluxDB relies on a push mechanism: Telegraf or any other data collector pushes data to it. This means integrating with InfluxDB requires little configuration beyond using Influx’s SDKs, Telegraf, or another data collection tool (you can even upload CSV files manually).
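What gets pushed is typically InfluxDB line protocol: `measurement,tag=value field=value timestamp`. Below is a minimal serializer sketch that handles only numeric fields (written as floats) and escapes commas, equals signs, and spaces as the format requires. The measurement and tag names are hypothetical, and because the timestamp here is in milliseconds, the write request has to declare millisecond precision (for example via the write API's `precision=ms` parameter).

```javascript
// Tag keys, tag values and field keys escape commas, equals signs and spaces
const escapeKey = (s) => String(s).replace(/[,= ]/g, (c) => '\\' + c);
// Measurement names escape commas and spaces
const escapeMeasurement = (s) => String(s).replace(/[, ]/g, (c) => '\\' + c);

// Numeric fields only; a bare number in line protocol is stored as a float
function toLineProtocol({ measurement, tags = {}, fields, timestampMs }) {
  const tagPart = Object.entries(tags)
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0)) // sorting tags by key is recommended for write performance
    .map(([k, v]) => `,${escapeKey(k)}=${escapeKey(v)}`)
    .join('');
  const fieldPart = Object.entries(fields)
    .map(([k, v]) => `${escapeKey(k)}=${Number(v)}`)
    .join(',');
  return `${escapeMeasurement(measurement)}${tagPart} ${fieldPart} ${timestampMs}`;
}

console.log(toLineProtocol({
  measurement: 'gas_price',
  tags: { chain: 'ethereum', network: 'mainnet' },
  fields: { gwei: 23.5 },
  timestampMs: 1654077600000,
}));
// gas_price,chain=ethereum,network=mainnet gwei=23.5 1654077600000
```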

3. Prometheus + Grafana

A popular choice for collecting metrics is Prometheus, which also offers a suite of vendor-agnostic tools. Prometheus relies on a pull mechanism to scrape data: an application or service has to expose an endpoint that Prometheus calls regularly to collect metrics. If you prefer sticking to the push mechanism, Prometheus offers a Pushgateway: your service or application pushes data to the gateway, and Prometheus scrapes it from there. You can find more information in the official docs.
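The endpoint Prometheus scrapes returns plain text in the Prometheus exposition format. The sketch below renders that text from an in-memory description of metrics; the metric name, labels, and values are hypothetical. In a real service this string would be served at something like `GET /metrics`, for example with Node's `http` module or a client library such as prom-client, which handles this for you.

```javascript
// Label values escape backslashes, double quotes and newlines
const escapeLabelValue = (v) =>
  String(v).replace(/\\/g, '\\\\').replace(/"/g, '\\"').replace(/\n/g, '\\n');

// metrics: [{ name, help, type, samples: [{ labels, value }] }]
function renderPrometheus(metrics) {
  const lines = [];
  for (const { name, help, type, samples } of metrics) {
    lines.push(`# HELP ${name} ${help}`);
    lines.push(`# TYPE ${name} ${type}`);
    for (const { labels = {}, value } of samples) {
      const labelStr = Object.entries(labels)
        .map(([k, v]) => `${k}="${escapeLabelValue(v)}"`)
        .join(',');
      lines.push(`${name}${labelStr ? `{${labelStr}}` : ''} ${value}`);
    }
  }
  return lines.join('\n') + '\n';
}

const exposition = renderPrometheus([{
  name: 'rpc_requests_total',
  help: 'Total JSON-RPC requests sent to the node provider.',
  type: 'counter',
  samples: [
    { labels: { method: 'eth_call', status: 'ok' }, value: 1027 },
    { labels: { method: 'eth_call', status: 'error' }, value: 3 },
  ],
}]);
console.log(exposition);
// # HELP rpc_requests_total Total JSON-RPC requests sent to the node provider.
// # TYPE rpc_requests_total counter
// rpc_requests_total{method="eth_call",status="ok"} 1027
// rpc_requests_total{method="eth_call",status="error"} 3
```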

4. OpenTelemetry

The premise of OpenTelemetry (OTel) is the creation of a vendor-agnostic protocol to handle logs, metrics, and traces. The project is open source and part of the Cloud Native Computing Foundation (CNCF).

The protocol can be used through the open-source instrumentation SDKs, with which you can send observability data to any storage and visualization solution. The project also offers a collector that you can deploy in your infrastructure, for example as a sidecar. The collector supports advanced operations such as filtering out sensitive data. In addition, most observability vendors support OTel.

The learning curve for OTel might be steep at the beginning, but the unified pipeline for logs, metrics, and traces is worth it.

5. Custom pipeline + time series DB + visualization solution

If you want to quickly set up a periodic pipeline that brings data to Grafana, where you can experiment with it, Blocktorch’s last blog article can be helpful :)

Metrics in web3

A metric in web3 follows the same principles as in web2. Blockchain data is public, and every block in this distributed ledger already carries a timestamp. So once the data pipeline and warehouse are set up, one can start playing around with metrics.

There are some frequently used metrics to measure usage/engagement, like Total Value Locked (TVL) in DeFi, the number of wallets interacting with a certain smart contract, or also simply the total number of transactions in a given time period.
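As a sketch of two of these usage metrics, the function below counts distinct wallets and transactions per UTC day. It assumes the transactions have already been indexed (for example with one of the approaches from the logging section) into records with the sender address and the block timestamp, which on Ethereum is in seconds since the Unix epoch; the addresses are made up.

```javascript
// Hypothetical input: [{ from: '0x...', blockTimestamp: seconds since epoch }]
function dailyUsage(transactions) {
  const days = new Map(); // 'YYYY-MM-DD' (UTC) -> { wallets: Set, txCount }
  for (const { from, blockTimestamp } of transactions) {
    const day = new Date(blockTimestamp * 1000).toISOString().slice(0, 10);
    if (!days.has(day)) days.set(day, { wallets: new Set(), txCount: 0 });
    const entry = days.get(day);
    entry.wallets.add(from.toLowerCase()); // checksum casing must not create duplicate wallets
    entry.txCount += 1;
  }
  return [...days.entries()]
    .sort(([a], [b]) => a.localeCompare(b))
    .map(([day, { wallets, txCount }]) => ({ day, activeWallets: wallets.size, txCount }));
}

const walletA = '0x' + 'aa'.repeat(20);
const walletB = '0x' + 'bb'.repeat(20);
const at = (iso) => Date.parse(iso) / 1000; // ISO date -> block-style seconds

const txs = [
  { from: walletA, blockTimestamp: at('2022-06-01T09:00:00Z') },
  { from: '0x' + 'AA'.repeat(20), blockTimestamp: at('2022-06-01T12:00:00Z') }, // same wallet, other casing
  { from: walletB, blockTimestamp: at('2022-06-01T15:00:00Z') },
  { from: walletB, blockTimestamp: at('2022-06-02T08:00:00Z') },
];

console.log(dailyUsage(txs));
// [ { day: '2022-06-01', activeWallets: 2, txCount: 3 },
//   { day: '2022-06-02', activeWallets: 1, txCount: 1 } ]
```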

One tool that provides great functionality for anyone interested in such usage metrics is Dune. Anyone can build dashboards in Dune and share them with the world; the only skill needed is SQL. Below is an example by Dune user lightsoutjames about daily users of STEPN.

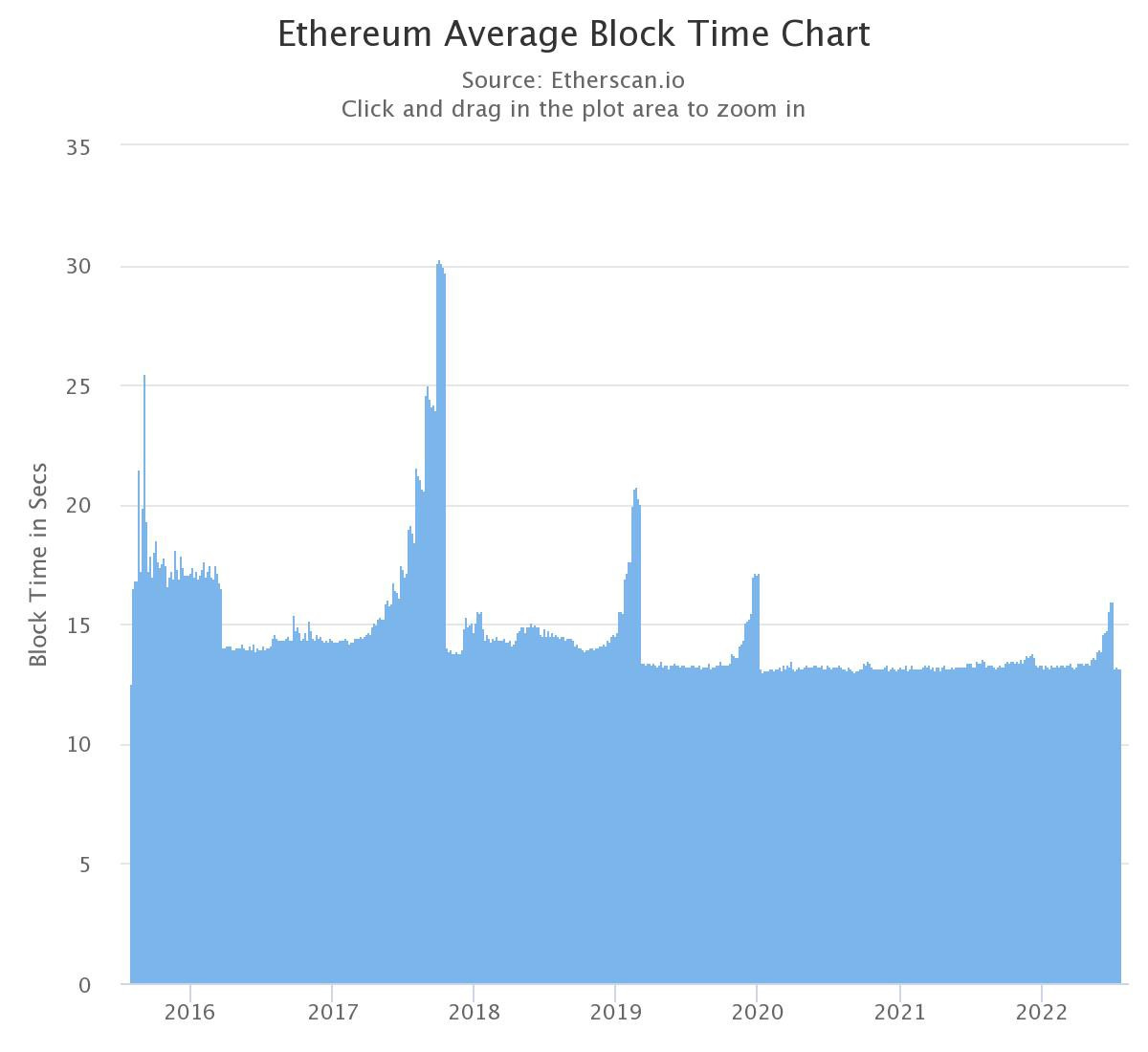

Another set of web3 metrics relates to the specifics of transactions: event logs, gas burned, the time elapsed until confirmation, or input/output statistics. Block explorers like Etherscan offer APIs to access such data, as well as statistics like the average gas price. Below is an example of Etherscan’s chart for the average block time on Ethereum.

Node providers like Alchemy also offer analytics and dashboards alongside their infrastructure APIs. These show usage analytics, such as the number of transactions, as well as app health metrics, such as the latency of API calls to Alchemy nodes and error rates.
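Block-level metrics such as block time and gas burned can also be computed directly from raw block data. A minimal sketch, assuming block objects with the fields returned by `eth_getBlockByNumber` (number, timestamp, gasUsed, baseFeePerGas) already converted from hex, and consecutive blocks as input. Since EIP-1559 (the London upgrade), the base fee is burned for every unit of gas used, so the burn per block is `baseFeePerGas * gasUsed`; the values in the example are made up.

```javascript
// Hypothetical block shape: { number, timestamp (seconds), gasUsed (BigInt), baseFeePerGas (BigInt, wei) }
function blockStats(blocks) {
  if (blocks.length < 2) throw new Error('need at least two consecutive blocks');
  const sorted = [...blocks].sort((a, b) => a.number - b.number);
  const first = sorted[0];
  const last = sorted[sorted.length - 1];
  // The consecutive intervals telescope, so their mean is the total span divided by their count
  const averageBlockTimeSeconds = (last.timestamp - first.timestamp) / (sorted.length - 1);
  // EIP-1559: the base fee of every unit of gas used is burned
  const burnedWei = sorted.reduce((sum, b) => sum + b.baseFeePerGas * b.gasUsed, 0n);
  return { averageBlockTimeSeconds, burnedWei };
}

const GWEI = 1_000_000_000n;
const blocks = [1000, 1012, 1024, 1036].map((timestamp, i) => ({
  number: 15_000_000 + i,
  timestamp,
  gasUsed: 15_000_000n,
  baseFeePerGas: 20n * GWEI,
}));

const stats = blockStats(blocks);
console.log(stats.averageBlockTimeSeconds); // 12
console.log(stats.burnedWei); // 1200000000000000000n (1.2 ETH)
```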

For more sophisticated metrics, such as cross-chain measurements, input/output anomalies, execution metrics, or advanced event analytics, the only option today is to build a solution from scratch on your own. But no worries, Blocktorch will change that ;)

Follow the traces.

Traces provide context.

Imagine you are Sherlock Holmes on a case: you find individual pieces of evidence and then need to put the puzzle together. Tracing helps you understand how the pieces fit.

For example, buying an NFT on OpenSea does not interact with the NFT contract directly; the transaction goes through a series of contract calls that can be traced as shown below.

Tracing is the hardest of the pillars to retrofit into existing infrastructure because to implement really effective tracing, every component in the path of a request needs to propagate tracing information.

Understanding traces

There are two different kinds of relevant traces:

A stack trace is a representation of a call stack at a certain point in time, containing all invocations from the start of a thread until an exception.

A distributed trace is the observation of the end-to-end path of causally related distributed events flowing through a distributed system. By following a single trace, the path traversed by the request and the structure of the request can be derived.

A stack trace helps developers locate where within a single service an error occurred, while a distributed trace shows which services are involved in a request and helps to understand the junctures and effects of asynchrony in its execution. Without tracing, pinpointing the root cause of issues in a distributed system is very challenging.

Each distributed trace is composed of a number of spans, each representing an operation within the flow of the request; a span can also include a stack trace, for example when an error occurs. The glue that holds all spans together as a full trace is called context propagation: identifiers passed along with the request allow the spans to be correlated with each other. These traces are usually visualized as waterfall diagrams, flame charts, or Gantt charts.
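To show how propagated context turns spans into a trace, here is a minimal sketch: every span carries the shared `traceId`, its own `spanId`, and the `spanId` of its parent. Rebuilding the parent/child tree and indenting by depth gives a text version of a waterfall diagram. The span shape is simplified and hypothetical (loosely modeled on OpenTelemetry's fields), as are the operation names and timings.

```javascript
// Hypothetical span shape: { traceId, spanId, parentSpanId, name, startMs, endMs }
function renderWaterfall(spans, traceId) {
  // Spans of one request share a traceId; spans from other traces are ignored
  const traceSpans = spans.filter((s) => s.traceId === traceId);
  const root = traceSpans.find((s) => !s.parentSpanId);
  if (!root) throw new Error(`trace ${traceId} has no root span`);

  // Index children by the parent span id they carry
  const children = new Map();
  for (const span of traceSpans) {
    if (!span.parentSpanId) continue;
    if (!children.has(span.parentSpanId)) children.set(span.parentSpanId, []);
    children.get(span.parentSpanId).push(span);
  }

  // Depth-first walk: indentation = depth, offset relative to the root span's start
  const lines = [];
  const visit = (span, depth) => {
    const offset = span.startMs - root.startMs;
    const duration = span.endMs - span.startMs;
    lines.push(`${'  '.repeat(depth)}${span.name} [+${offset}ms, ${duration}ms]`);
    const kids = (children.get(span.spanId) ?? []).sort((a, b) => a.startMs - b.startMs);
    for (const child of kids) visit(child, depth + 1);
  };
  visit(root, 0);
  return lines;
}

const spans = [
  { traceId: 't1', spanId: 'a', parentSpanId: null, name: 'POST /buy', startMs: 0, endMs: 900 },
  { traceId: 't1', spanId: 'b', parentSpanId: 'a', name: 'check listing', startMs: 5, endMs: 45 },
  { traceId: 't1', spanId: 'c', parentSpanId: 'b', name: 'db query', startMs: 10, endMs: 30 },
  { traceId: 't1', spanId: 'e', parentSpanId: 'a', name: 'wait for receipt', startMs: 120, endMs: 880 },
  { traceId: 't1', spanId: 'd', parentSpanId: 'a', name: 'eth_sendRawTransaction', startMs: 50, endMs: 120 },
  { traceId: 't2', spanId: 'x', parentSpanId: null, name: 'GET /health', startMs: 3, endMs: 4 },
];

console.log(renderWaterfall(spans, 't1').join('\n'));
// POST /buy [+0ms, 900ms]
//   check listing [+5ms, 40ms]
//     db query [+10ms, 20ms]
//   eth_sendRawTransaction [+50ms, 70ms]
//   wait for receipt [+120ms, 760ms]
```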

OpenTelemetry has become the leading modern framework in web2 for distributed tracing, and for observability in general. It provides a set of vendor-agnostic APIs with a common format to collect and send data. To visualize OpenTelemetry trace data, open-source software like Jaeger or Zipkin can be used. Once OpenTelemetry is set up, the data can also be ingested by vendors like Datadog, Dynatrace, or Splunk.

Tracing in web3

In web3, the only kinds of traces that exist today are stack traces of transactions and mined blocks.

There is no way to follow a transaction from the UI to the persistence layers with ample context (something Blocktorch is tackling). If, for example, an action in the UI triggers two different transactions on the blockchain (as in a bridge, where there is one transaction on each chain), there is no way to see a full trace.

In practice, some teams use web2 observability tools to trace requests up to the point where they flow from the centralized components to the decentralized components; from there, the request disappears into the dark.
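One way teams can at least mark where a trace crosses into the chain is to propagate a W3C Trace Context `traceparent` header (`00-<trace-id>-<parent-id>-<flags>`) to the backend and record the resulting transaction hash on the last off-chain span. The sketch below is hypothetical: it assumes a bridge backend that submits both legs itself, uses made-up attribute names (`tx.hash`, `chain.id`), and handles only version `00` headers. It links the two on-chain transactions to one trace ID but does not trace what happens inside the chains.

```javascript
const { randomBytes } = require('crypto');

const randomHex = (bytes) => randomBytes(bytes).toString('hex');

// traceparent = version "00" - 16-byte trace-id - 8-byte parent span id - flags ("01" = sampled)
function createTraceparent(traceId = randomHex(16), spanId = randomHex(8)) {
  return `00-${traceId}-${spanId}-01`;
}

// Only version 00 is handled; all-zero ids are invalid per the spec
function parseTraceparent(header) {
  const match = /^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/.exec(header);
  if (!match || /^0+$/.test(match[1]) || /^0+$/.test(match[2])) return null;
  return { traceId: match[1], parentSpanId: match[2], flags: match[3] };
}

// Hypothetical backend step: continue the incoming trace and attach the submitted tx hash
function recordSubmission(incomingHeader, txHash, chainId) {
  const ctx = parseTraceparent(incomingHeader);
  if (!ctx) throw new Error('invalid traceparent header');
  return {
    traceId: ctx.traceId,
    spanId: randomHex(8),
    parentSpanId: ctx.parentSpanId,
    name: 'submit transaction',
    attributes: { 'tx.hash': txHash, 'chain.id': chainId }, // hypothetical attribute names
  };
}

// One UI action, two bridge legs on two chains, both linked to the same trace
const header = createTraceparent();
const sourceSpan = recordSubmission(header, '0x' + 'ab'.repeat(32), 1);
const destSpan = recordSubmission(header, '0x' + 'cd'.repeat(32), 137);
console.log(sourceSpan.traceId === destSpan.traceId); // true
```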

To obtain the stack trace of an EVM transaction, Geth’s debug_traceTransaction RPC method can be used. Alternatively, some node-as-a-service providers like Alchemy also offer a trace API endpoint. The simplest type of transaction trace that Geth can generate is a raw EVM opcode trace. Below is an example as shown in Geth’s documentation:

```
> debug.traceTransaction("0x2059dd53ecac9827faad14d364f9e04b1d5fe5b506e3acc886eff7a6f88a696a")
{
  gas: 85301,
  returnValue: "",
  structLogs: [{
      depth: 1,
      error: "",
      gas: 162106,
      gasCost: 3,
      memory: null,
      op: "PUSH1",
      pc: 0,
      stack: [],
      storage: {}
  },
    /* snip */
  {
      depth: 1,
      error: "",
      gas: 100000,
      gasCost: 0,
      memory: ["0000000000000000000000000000000000000000000000000000000000000006", "0000000000000000000000000000000000000000000000000000000000000000", "0000000000000000000000000000000000000000000000000000000000000060"],
      op: "STOP",
      pc: 120,
      stack: ["00000000000000000000000000000000000000000000000000000000d67cbec9"],
      storage: {
        0000000000000000000000000000000000000000000000000000000000000004: "8241fa522772837f0d05511f20caa6da1d5a3209000000000000000400000001",
        0000000000000000000000000000000000000000000000000000000000000006: "0000000000000000000000000000000000000000000000000000000000000001",
        f652222313e28459528d920b65115c16c04f3efc82aaedc97be59f3f377c0d3f: "00000000000000000000000002e816afc1b5c0f39852131959d946eb3b07b5ad"
      }
  }]
}
```
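Raw opcode traces quickly get long, so it helps to process them programmatically. This minimal sketch builds the JSON-RPC 2.0 request body for `debug_traceTransaction` (the node must expose the `debug` namespace; an empty config object keeps the default opcode logger) and aggregates `structLogs` entries into per-opcode counts and gas. The structLogs sample in the usage example is hypothetical. Call-type opcodes may report gas forwarded to the callee, so treat the totals as approximate.

```javascript
// JSON-RPC 2.0 body for Geth's debug_traceTransaction; {} = default struct (opcode) logger
function buildTraceRequest(txHash, id = 1) {
  return { jsonrpc: '2.0', id, method: 'debug_traceTransaction', params: [txHash, {}] };
}

// Aggregate structLogs entries into { op, count, gas }, most expensive opcodes first
function summarizeStructLogs(structLogs) {
  const byOp = new Map();
  for (const { op, gasCost } of structLogs) {
    const entry = byOp.get(op) ?? { op, count: 0, gas: 0 };
    entry.count += 1;
    entry.gas += gasCost;
    byOp.set(op, entry);
  }
  return [...byOp.values()].sort((a, b) => b.gas - a.gas);
}

const traceRequest = buildTraceRequest('0x2059dd53ecac9827faad14d364f9e04b1d5fe5b506e3acc886eff7a6f88a696a');
// To send it, POST JSON.stringify(traceRequest) with Content-Type: application/json to your node's HTTP endpoint
console.log(JSON.stringify(traceRequest));

// Hypothetical structLogs excerpt
const summary = summarizeStructLogs([
  { op: 'PUSH1', gasCost: 3 },
  { op: 'PUSH1', gasCost: 3 },
  { op: 'SSTORE', gasCost: 20000 },
  { op: 'STOP', gasCost: 0 },
]);
console.log(summary);
// [ { op: 'SSTORE', count: 1, gas: 20000 }, { op: 'PUSH1', count: 2, gas: 6 }, { op: 'STOP', count: 1, gas: 0 } ]
```

If the call structure matters more than individual opcodes, Geth also ships a built-in `callTracer`, selected by passing `{ tracer: 'callTracer' }` as the second parameter, which returns nested calls instead of struct logs.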

To see the stack trace of a transaction without making any API calls, Tenderly is a great tool for getting the information quickly. In Tenderly’s explorer, one can search for a transaction hash, and the transaction stack trace is provided as part of the result. This is what the transaction stack trace looks like for a transfer in the Uniswap contract, showing all internally called functions:

Without distributed tracing, understanding the context of a request flowing through services is almost impossible. If Sherlock Holmes had to look at each clue as siloed information, he would never have become one of the most famous detectives. To level up engineering practices in web3, distributed tracing through the multi-chain decentralized stack is required.

Please drop us a like if you enjoyed the article, comment below if you have any feedback, and share it on Twitter if you found it useful.

--

The Blocktorch team & Vlad